The Space-Native Data Center: A First Principles Design for 2030

AI is consuming energy at a rate that Earth's grids can barely sustain. The solution is not to build more power plants - it is to go where the power is infinite and the cooling is free.

I spent several days modeling a 100 Megawatt Orbital Compute Cluster with Gemini 2.5 Pro (and later Gemini 3 Pro Preview). We tore down the data center to its atomic units - physics, economics, and materials - to design a rig that lives in the vacuum. What started as idle speculation turned into a surprisingly rigorous engineering exercise.

Here is the blueprint for the "Star-Tape" Inference Engine.

Part I: The Energy Wall

The Problem No One Wants to Talk About

We are hitting a wall. Not a compute wall - a power wall.

Training GPT-4 required an estimated 50 GWh of electricity. GPT-5 will require more. Each generation of frontier models demands roughly 10x the compute of the last. At some point, the grid cannot keep up.

"There's a 190 GW gap between AI ambition and U.S. grid reality." - Philip Johnston, Starcloud

The hyperscalers know this. Google, Microsoft, and Amazon are signing nuclear deals, acquiring geothermal rights, and building natural gas peaker plants just to keep the lights on. But even these measures are Band-Aids on a fundamental problem: Earth has finite, geographically constrained power sources.

Meanwhile, 150 million kilometers away, there is a fusion reactor outputting 3.86 x 10^26 watts. We call it the Sun.

Why Space Changes Everything

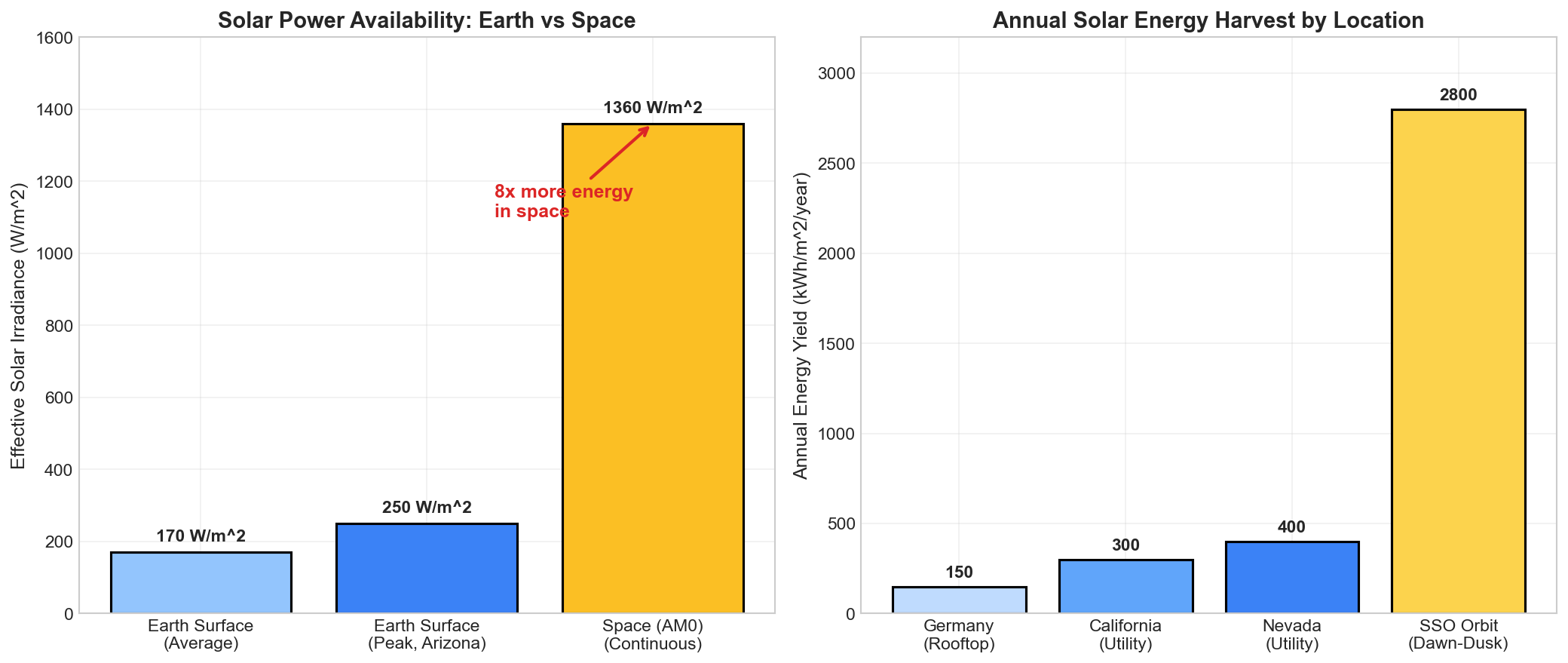

The arithmetic is simple:

| Location | Effective Solar (W/m^2) | Availability |
|---|---|---|
| Germany (rooftop) | 120 | Intermittent |
| Arizona (utility) | 250 | Daytime only |
| Space (AM0, SSO) | 1,360 | 24/7/365 |

In Sun-Synchronous Orbit (SSO), specifically the "Dawn-Dusk" configuration at 600km altitude, a satellite rides the terminator line between day and night. It almost never enters Earth's shadow: at this altitude the only interruptions are brief eclipses during a seasonal window around one solstice. The solar panels face the Sun continuously. The radiators face deep space continuously.
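
To make the table concrete, here is a small sketch that turns its three flux figures into ratios and annual energy per square meter. The flux values are the table's own; treating each one as a year-round average over 8,760 hours is an assumption made for illustration, and the function names are hypothetical.

```python
# Effective solar flux from the table above, in W/m^2.
EFFECTIVE_FLUX_W_M2 = {
    "Germany (rooftop)": 120,
    "Arizona (utility)": 250,
    "Space (AM0, SSO)": 1360,
}

HOURS_PER_YEAR = 8760  # assumption: each figure is a year-round average


def annual_energy_kwh_per_m2(flux_w_m2):
    """Energy reaching one square meter over a year, in kWh."""
    return flux_w_m2 * HOURS_PER_YEAR / 1000


def space_advantage(site):
    """How many times more flux the orbital case receives than `site`."""
    return EFFECTIVE_FLUX_W_M2["Space (AM0, SSO)"] / EFFECTIVE_FLUX_W_M2[site]


if __name__ == "__main__":
    for site, flux in EFFECTIVE_FLUX_W_M2.items():
        print(f"{site}: {annual_energy_kwh_per_m2(flux):,.0f} kWh/m^2/yr, "
              f"space advantage {space_advantage(site):.1f}x")
```

On these numbers the orbital case collects roughly 11x the German rooftop figure and about 5.4x the Arizona figure per square meter.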

"You get 8x more energy in space than on Earth for a given area of solar panel." - Jeff Bezos

This is not speculation. This is orbital mechanics.

Part II: The Economic Trigger

The Launch Cost Cliff

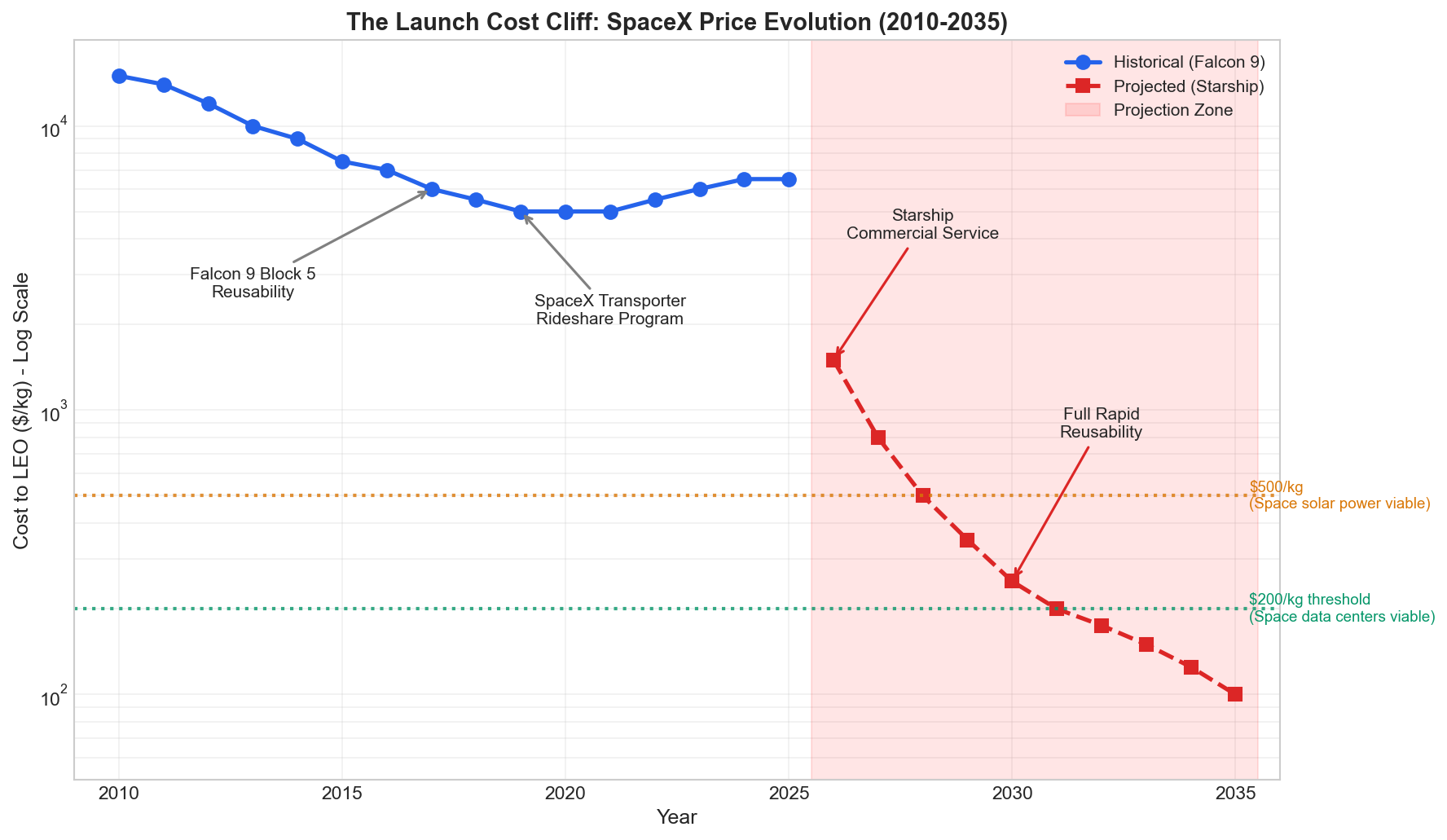

Space has always been "too expensive." Launch costs of $10,000 per kilogram made anything beyond communications satellites economically insane. But we are witnessing a discontinuity event.

SpaceX has pushed launch prices down through reusability, though not monotonically, and Starship is projected to cut them far more steeply:

- 2015: ~$10,000/kg (Falcon 9, expendable)

- 2019: ~$5,000/kg (Falcon 9, reusable + Transporter rideshare)

- 2024: ~$6,500/kg (Transporter-11, current pricing)

- 2027+ (projected): <$500/kg (Starship commercial service)

- 2030+ (projected): <$200/kg (Starship at scale)
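
A rough sketch of what these per-kilogram prices mean for one heavy payload. The prices come from the list above, with the two Starship entries taken at their projected upper bounds. The 100,000 kg payload mass is a hypothetical round number chosen for illustration; the article does not state the mass of the cluster.

```python
# Price points from the list above, in USD per kilogram to orbit.
# The two Starship entries are projections, used here at their upper bounds.
LAUNCH_PRICE_USD_PER_KG = {
    "2015 Falcon 9, expendable": 10_000,
    "2019 Falcon 9, reusable + rideshare": 5_000,
    "2024 Transporter-11": 6_500,
    "2027+ Starship (projected)": 500,
    "2030+ Starship at scale (projected)": 200,
}

# Hypothetical payload mass, for illustration only (not from the article).
ASSUMED_PAYLOAD_KG = 100_000


def launch_cost_usd(price_per_kg, payload_kg=ASSUMED_PAYLOAD_KG):
    """Cost of lifting `payload_kg` at a flat per-kilogram price."""
    return price_per_kg * payload_kg


if __name__ == "__main__":
    for label, price in LAUNCH_PRICE_USD_PER_KG.items():
        cost_millions = launch_cost_usd(price) / 1e6
        print(f"{label}: ${cost_millions:,.0f}M for {ASSUMED_PAYLOAD_KG:,} kg")
```

Under that assumed mass, the same payload goes from a billion dollars at $10,000/kg to at most $20 million at the projected $200/kg.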

"In not more than 5 years, the lowest cost way to do AI compute, will be in space." - Elon Musk (November 2025)

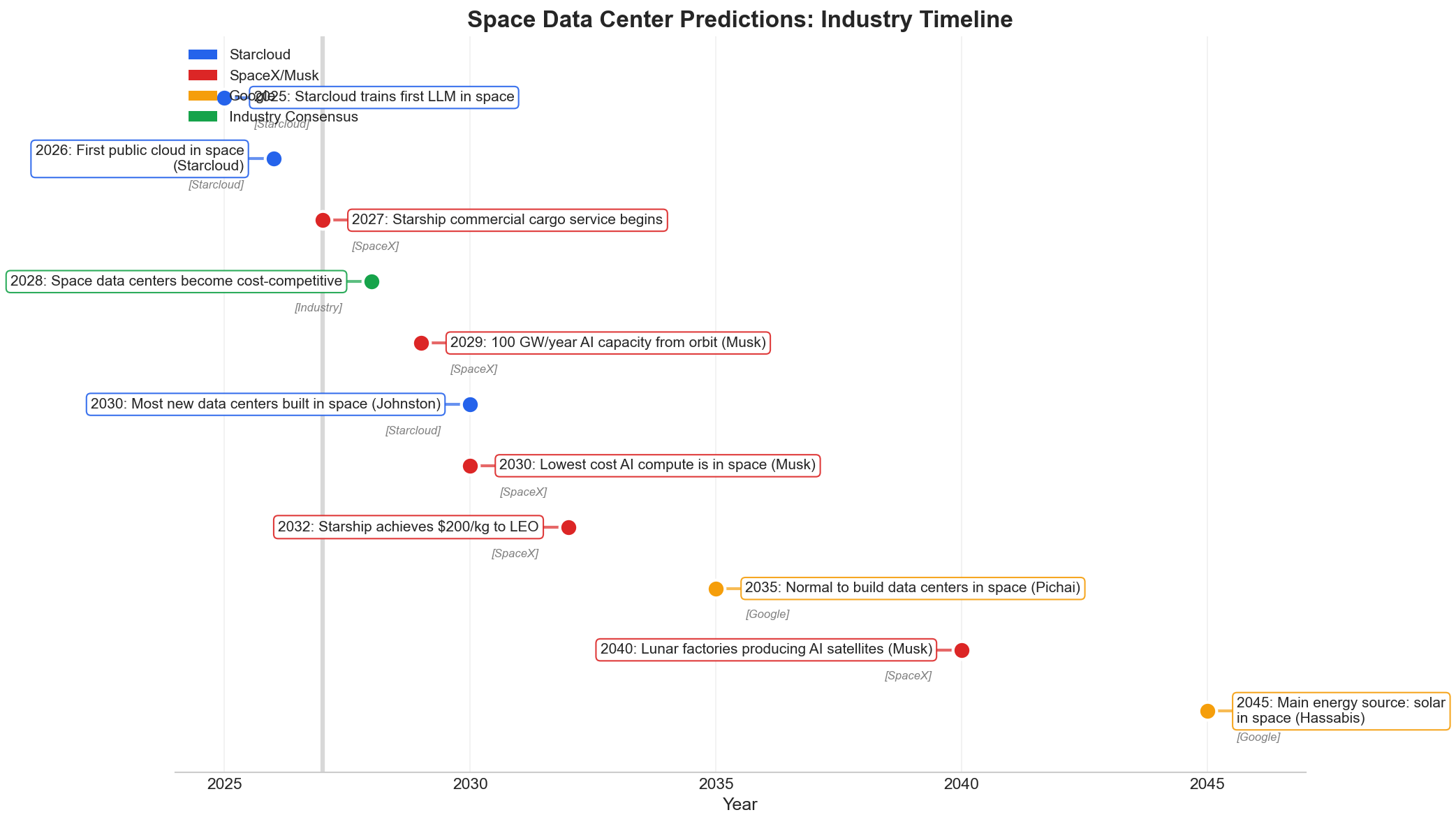

Part III: What the Industry Leaders Are Saying

This is not a fringe idea anymore. In the past 18 months, the space data center concept has gone from Twitter joke to boardroom conversation.

Elon Musk (SpaceX, xAI, Tesla)

"Starship should be able to deliver around 300 GW per year of solar-powered AI satellites to orbit, maybe 500 GW. The 'per year' part is what makes this such a big deal. Average US electricity consumption is around 500 GW, so at 300 GW/year, AI in space would exceed the entire US economy just in intelligence processing every 2 years."

Sundar Pichai (Google)

"There's no doubt to me that in 10 years, we will view it as normal to build data centers in space."

Jeff Bezos (Blue Origin)

"We will build giant gigawatt data centers in space."

Demis Hassabis (Google DeepMind)

"In 20 years, the main energy source will be solar powering data centers in space."

Part IV: The Physics Deep Dive

Solar Power Generation

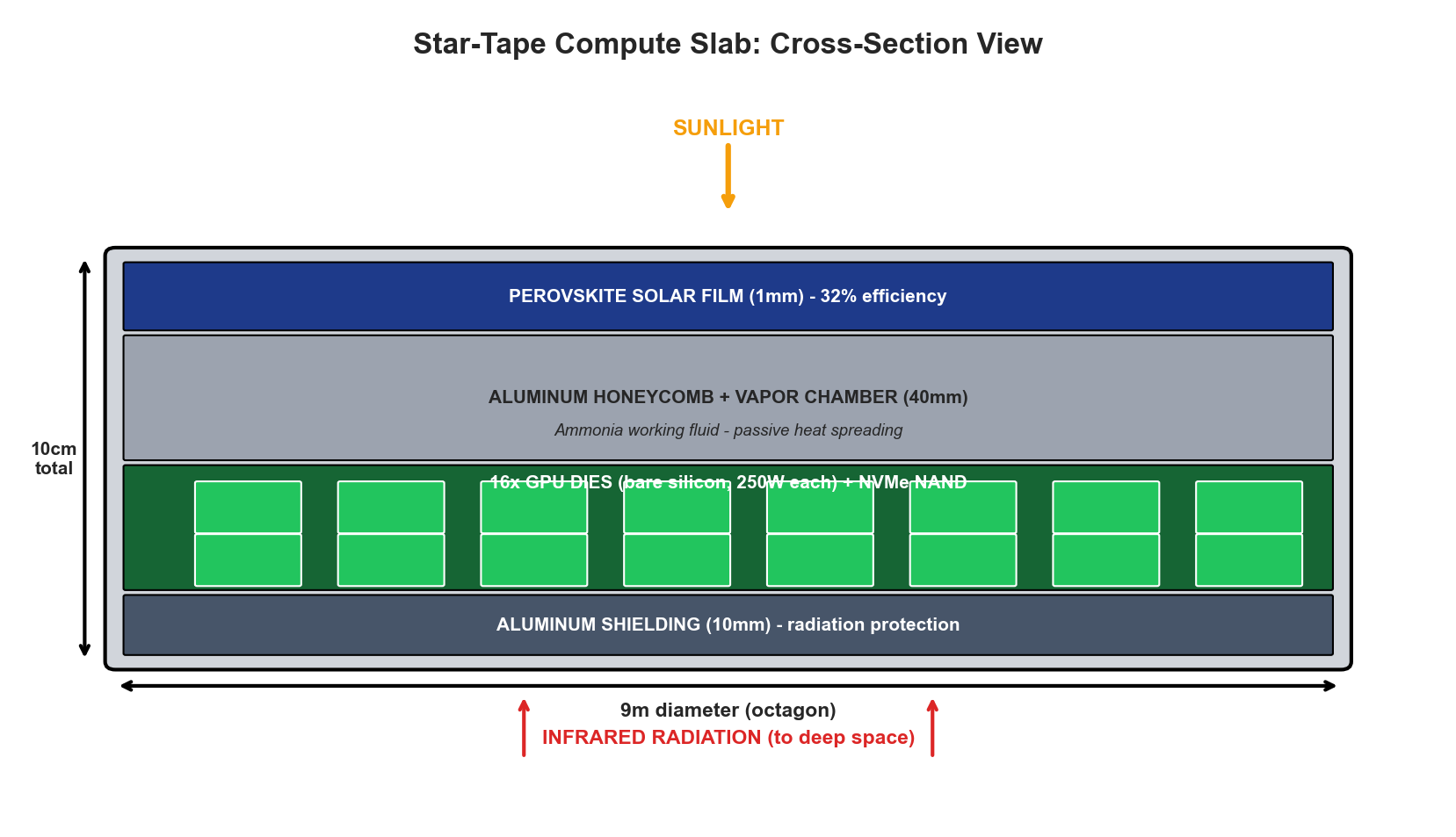

The solar constant at Earth's orbital distance is 1,360 W/m^2 (AM0 spectrum). This is the raw energy hitting every square meter of area perpendicular to the Sun.

Conversion efficiency:

- Standard terrestrial silicon: 20-22%

- Space-grade multi-junction (GaInP/GaAs/Ge): 32% (James Webb, Mars rovers)

- Advanced Perovskite tandem (projected): 35-40%
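
As a back-of-envelope check, the sketch below multiplies the solar constant by each efficiency to get electrical output per square meter, then sizes the array for the 100 MW cluster mentioned in the introduction. Using range midpoints is an assumption, and the calculation ignores pointing losses, cell degradation, and power conversion losses.

```python
SOLAR_CONSTANT_W_M2 = 1360  # AM0 figure quoted above

# Efficiencies from the list above; midpoints are used where a range is given.
CELL_EFFICIENCY = {
    "terrestrial silicon": 0.21,             # midpoint of 20-22%
    "space-grade multi-junction": 0.32,
    "perovskite tandem (projected)": 0.375,  # midpoint of 35-40%
}


def electrical_w_per_m2(efficiency):
    """Electrical output of one square meter of sun-facing panel."""
    return SOLAR_CONSTANT_W_M2 * efficiency


def array_area_m2(target_watts, efficiency):
    """Panel area needed to generate `target_watts`, ignoring all losses."""
    return target_watts / electrical_w_per_m2(efficiency)


if __name__ == "__main__":
    target_w = 100e6  # the 100 MW cluster from the introduction
    for name, eff in CELL_EFFICIENCY.items():
        print(f"{name}: {electrical_w_per_m2(eff):.0f} W/m^2, "
              f"{array_area_m2(target_w, eff) / 1e6:.3f} km^2 for 100 MW")
```

At 32% efficiency this works out to about 435 W of electricity per square meter, or roughly 0.23 square kilometers of panel for 100 MW.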

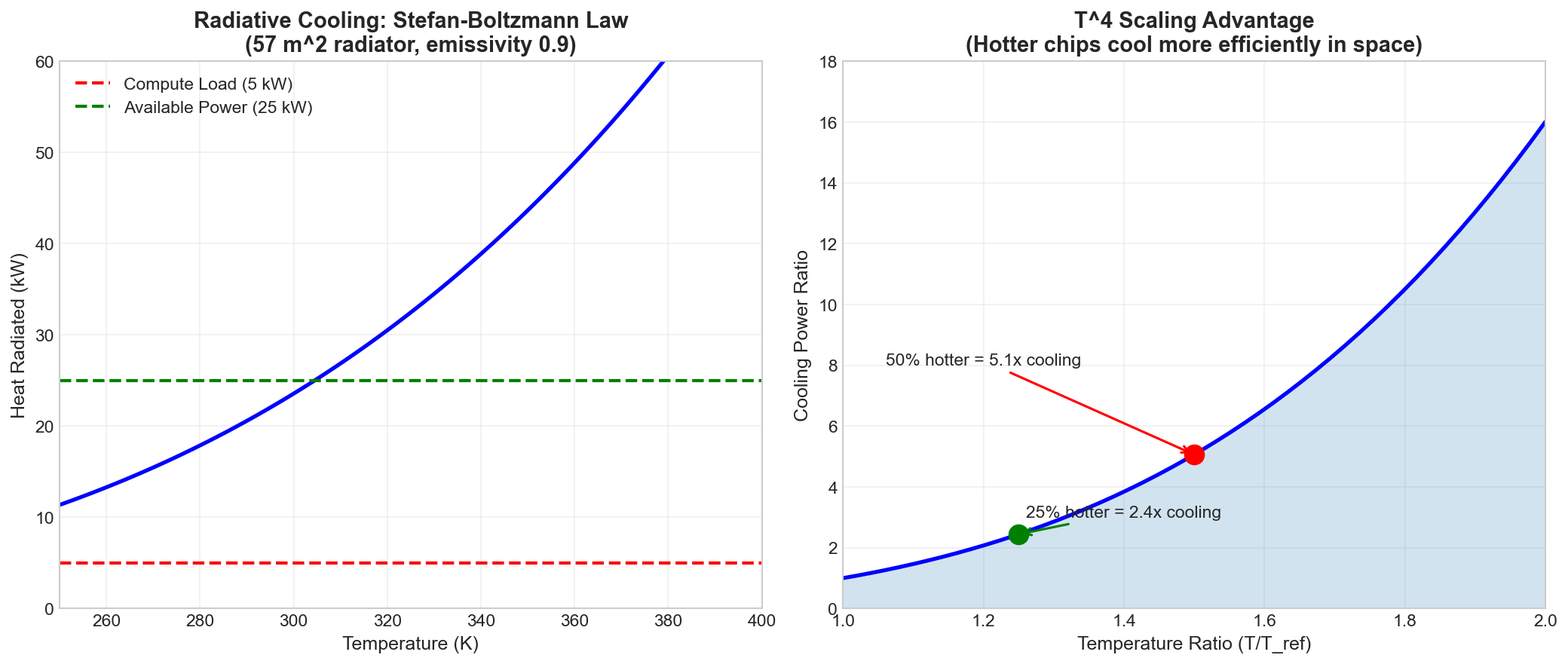

Thermal Management: The Stefan-Boltzmann Advantage

In space, there is no convection. Heat leaves only through radiation, governed by the Stefan-Boltzmann law:

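P = epsilon * sigma * A * T^4

Here P is the radiated power, epsilon the surface emissivity, sigma the Stefan-Boltzmann constant, A the radiating area, and T the absolute temperature of the radiating surface.

The T^4 term is the key insight. Radiated power rises with the fourth power of temperature, so a radiator at 350 K sheds about 1.85x as much heat per square meter as one at 300 K. This means running chips hotter in space is advantageous - the same radiator area rejects far more heat.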
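
The Stefan-Boltzmann law can be evaluated directly. The sketch below is an idealized estimate: the emissivity of 0.9 is an assumed value, the radiator is treated as a single surface facing a 0 K background, and absorbed sunlight and Earth infrared are ignored. The function names are hypothetical.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # sigma, in W / (m^2 K^4)


def radiated_power_w(area_m2, temp_k, emissivity=0.9):
    """P = epsilon * sigma * A * T^4 for an ideal one-sided radiator."""
    return emissivity * STEFAN_BOLTZMANN * area_m2 * temp_k ** 4


def radiator_area_m2(heat_w, temp_k, emissivity=0.9):
    """Area needed to reject `heat_w` at surface temperature `temp_k`."""
    return heat_w / radiated_power_w(1.0, temp_k, emissivity)


if __name__ == "__main__":
    for temp_k in (300, 325, 350):
        print(f"{temp_k} K: {radiated_power_w(1.0, temp_k):.0f} W/m^2, "
              f"{radiator_area_m2(1e6, temp_k):,.0f} m^2 per MW of heat")
```

Raising the radiator from 300 K to 350 K cuts the required area by almost half, which is the T^4 effect in practice.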

Part V: The Star-Tape Architecture

Design Philosophy: Passive Over Active

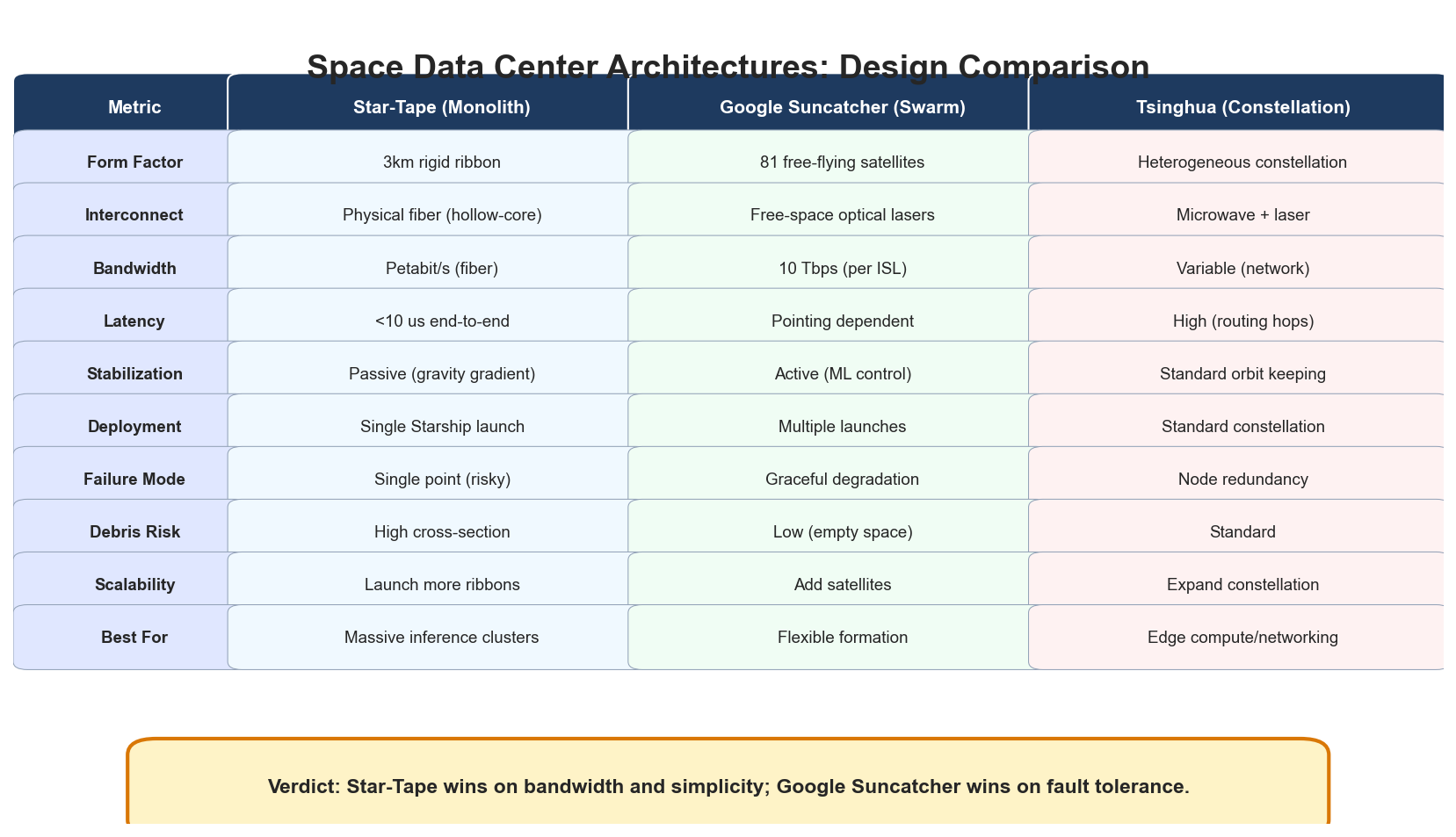

The core insight: We don't need a swarm of free-flying satellites. We need a physically connected structure.

Our approach: The Rigid Monolith. We deploy a single, physically connected structure - a 3-kilometer ribbon of compute slabs linked by fiber optic cable. No formation flying. No laser pointing. No active control loops.

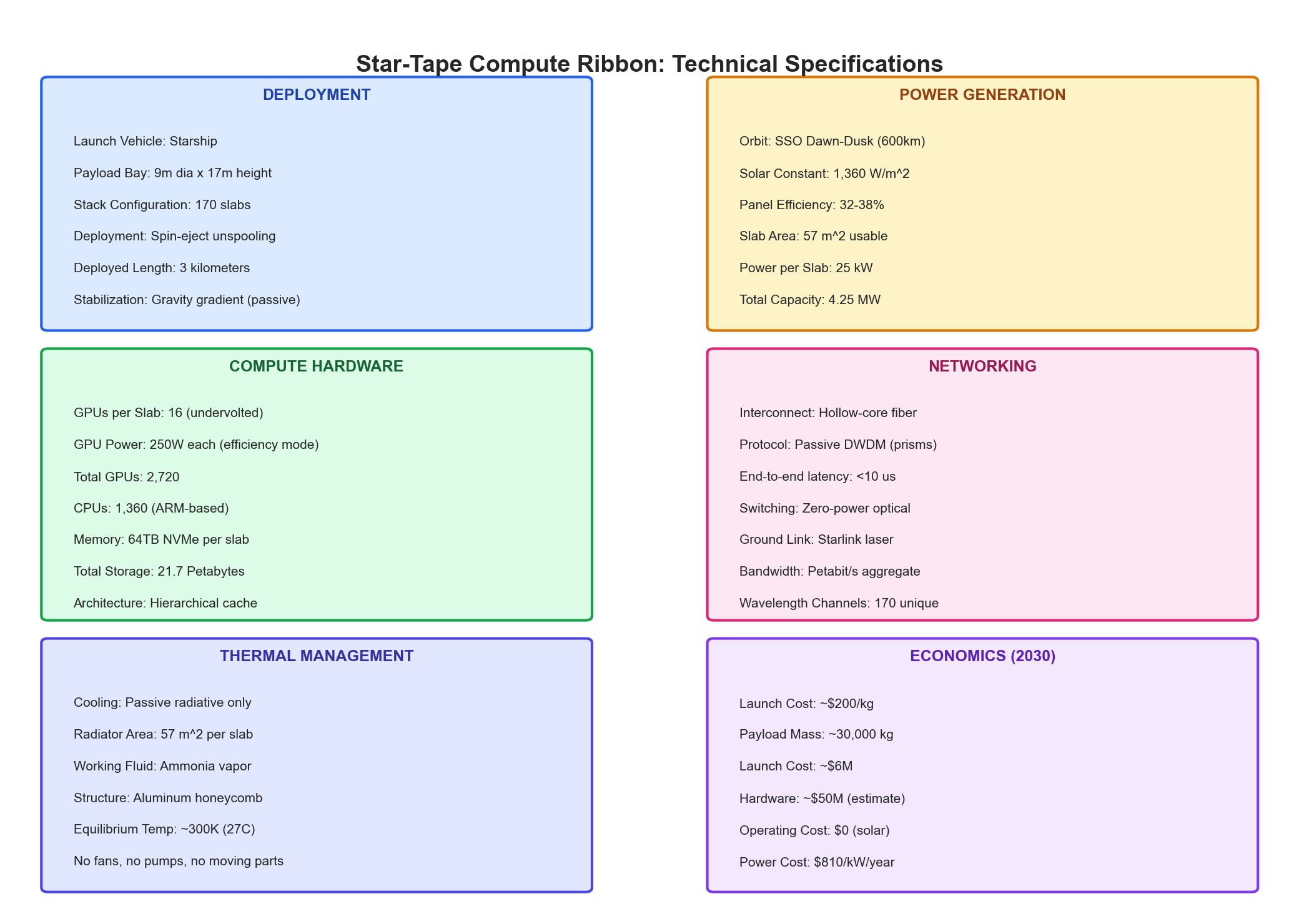

The Form Factor: Stacked Octagons

The Starship payload bay is a cylinder: 9m diameter, 17m height. We stack 170 octagonal slabs (each 10cm thick) inside this volume.

Deployment sequence:

- Starship enters a controlled flat spin

- The stack is released

- Angular momentum causes the slabs to separate

- The flexible fiber "spine" unspools

- Gravity gradient stabilization keeps the ribbon taut

- Result: A 1.5-3km long rigid ribbon floating in orbit
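
The stacking and ribbon figures can be checked with a few lines of arithmetic. One possible reading of the 1.5-3km range, which is an assumption rather than something stated above, is that each octagon spans the full 9m bay diameter: laid edge to edge the slabs give the short end of the range, and spacing them out along the fiber spine gives the long end.

```python
STACK_HEIGHT_M = 17.0    # payload bay height quoted above
SLAB_THICKNESS_M = 0.10  # 10cm per slab
SLAB_WIDTH_M = 9.0       # assumption: each octagon spans the 9m bay diameter


def slab_count():
    """Number of slabs that fit in the stowed stack."""
    return round(STACK_HEIGHT_M / SLAB_THICKNESS_M)


def edge_to_edge_length_m(n_slabs):
    """Ribbon length when the slabs touch with no gap between them."""
    return n_slabs * SLAB_WIDTH_M


def gap_for_length_m(target_length_m, n_slabs):
    """Uniform gap between neighboring slabs needed to reach a target length."""
    return (target_length_m - edge_to_edge_length_m(n_slabs)) / (n_slabs - 1)


if __name__ == "__main__":
    n = slab_count()
    print(f"slabs: {n}")
    print(f"edge-to-edge ribbon: {edge_to_edge_length_m(n):.0f} m")
    print(f"gap for a 3 km ribbon: {gap_for_length_m(3000, n):.2f} m")
```

Under that reading, 170 slabs give a ribbon of about 1.5 km with no gaps, and a 3 km ribbon needs a gap of just under 9m between neighbors.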

The Compute Hardware

We strip everything unnecessary. No fans. No chassis. No PCIe slots. Bare silicon bonded to aluminum.

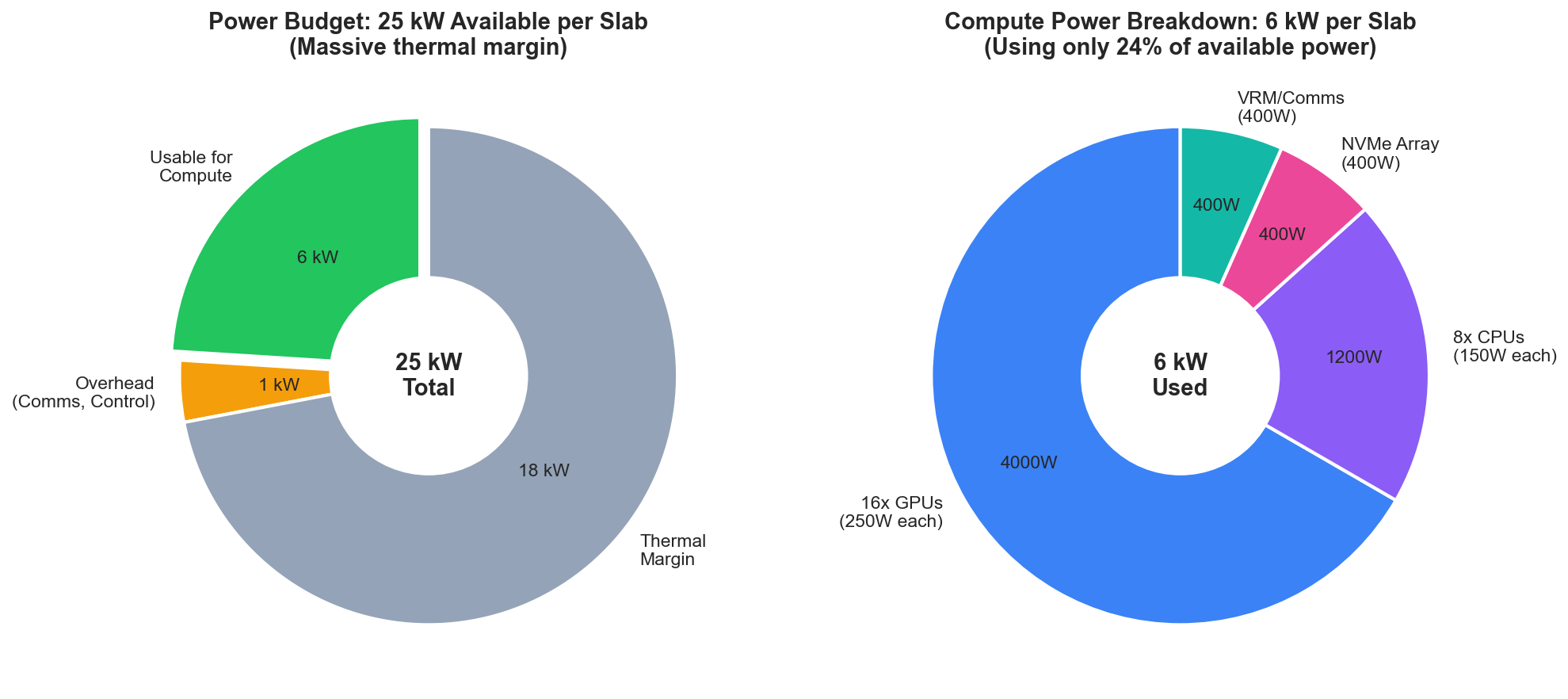

Per slab (170 total):

- 16x GPU dies (Blackwell-class, undervolted to 250W for efficiency)

- 8x ARM CPUs (Grace-class, 150W each)

- 64TB NVMe storage (16x 4TB SSDs)

- Flex-circuit "carpet" instead of traditional motherboard

The Totals (One Starship Launch)

| Metric | Value |
|---|---|
| Slabs | 170 |
| GPUs | 2,720 |
| CPUs | 1,360 |
| NVMe Storage | 10.9 Petabytes |
| Power Capacity | 4.25 MW |
| Ribbon Length | 1.5 km |
| Form Factor | 9m x 9m x 17m (stowed) |
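
The GPU, CPU, and storage totals follow directly from the per-slab bill of materials, as this sketch shows. The `Slab` class and `cluster_totals` function are hypothetical names. The 4.25 MW power capacity is not derived here: chip draw alone comes to about 0.88 MW, so that figure presumably describes generation capacity (25 kW per slab) rather than compute load.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slab:
    """Per-slab hardware, using the figures listed above."""
    gpus: int = 16
    gpu_watts: int = 250
    cpus: int = 8
    cpu_watts: int = 150
    ssds: int = 16
    ssd_tb: int = 4

    @property
    def compute_watts(self):
        return self.gpus * self.gpu_watts + self.cpus * self.cpu_watts

    @property
    def storage_tb(self):
        return self.ssds * self.ssd_tb


def cluster_totals(slab, n_slabs=170):
    """Scale one slab up to a full launch."""
    return {
        "gpus": slab.gpus * n_slabs,
        "cpus": slab.cpus * n_slabs,
        "storage_pb": slab.storage_tb * n_slabs / 1000,  # decimal petabytes
        "compute_mw": slab.compute_watts * n_slabs / 1e6,
    }


if __name__ == "__main__":
    for key, value in cluster_totals(Slab()).items():
        print(f"{key}: {value}")
```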

At roughly 32 GPUs per rack (four 8-GPU H100 servers, an assumed typical density), this is on the order of 85 racks of H100s in a single Starship payload - with zero ongoing electricity cost.

Part VII: Comparison With Academic Proposals

Google "Project Suncatcher"

Google DeepMind published their space compute paper in 2025. Their approach uses 81 free-flying satellites in a 1km formation with free-space optical lasers for interconnect.

We bypass this entirely with a physical wire.

Part XIV: The Critical Flaw - Why Training in Space is Harder Than I Thought

The Bit Flip Problem

In my original conversation, I glossed over a critical issue: cosmic radiation causes bit flips in memory, and this is catastrophic for training.

For inference, a bit flip corrupts one response. You retry. The user never notices.

For training, a bit flip corrupts a gradient or weight. That corruption propagates through backpropagation. The loss function spikes. The model weights explode to NaN. Your entire training run is destroyed.
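
To see why one flipped bit can be so destructive, the sketch below flips individual bits of an IEEE 754 float32 using Python's standard `struct` module. The helper `flip_bit` is a hypothetical name, and the values are illustrative weights rather than data from a real training run.

```python
import math
import struct


def flip_bit(value, bit):
    """Flip one bit of a float32 (bit 0 = lowest mantissa bit, bit 31 = sign)."""
    (raw,) = struct.unpack("<I", struct.pack("<f", value))
    (flipped,) = struct.unpack("<f", struct.pack("<I", raw ^ (1 << bit)))
    return flipped


if __name__ == "__main__":
    weight = 0.5
    # A flip in the low mantissa is harmless noise.
    print("bit 0: ", flip_bit(weight, 0))
    # A flip of the sign bit inverts the weight.
    print("bit 31:", flip_bit(weight, 31))
    # A flip in the top exponent bit turns 0.5 into 2**127.
    print("bit 30:", flip_bit(weight, 30))
    # The same flip applied to 1.0 yields infinity.
    print("is inf:", math.isinf(flip_bit(1.0, 30)))
```

Most flips land in the mantissa and are lost in the noise, but a single hit on a high exponent bit produces a value large enough to overflow everything downstream of it.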

The Real Solution: A Shielded Core Module

If we want to do training (not just inference), we need to fundamentally rethink the architecture:

Instead of 170 thin slabs, we need a central shielded "vault" containing the critical compute.

- Solar Collection Ring - Thin panels collecting power, no compute

- Central Vault - A single, heavily shielded cylinder (50cm walls) containing all GPUs

- Radiator Fins - Extended from the vault for thermal dissipation

- Memory Hierarchy - ECC RAM mandatory, RAID 5/6 for storage
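
To illustrate what ECC buys, here is a toy Hamming(7,4) code that corrects any single flipped bit in a 7-bit codeword. This is a swapped-in teaching example, not the scheme real memory uses: ECC DRAM typically protects 64-bit words with a wider single-error-correct, double-error-detect code. The function names are hypothetical.

```python
def hamming74_encode(data):
    """Encode 4 data bits as a 7-bit codeword (parity at positions 1, 2, 4)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers positions 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword):
    """Return (data bits, error position); position 0 means no error seen."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # parity check over positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # parity check over positions 2, 3, 6, 7
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]  # parity check over positions 4, 5, 6, 7
    position = s1 + 2 * s2 + 4 * s4  # syndrome is the 1-based error position
    if position:
        c[position - 1] ^= 1  # undo the single-bit upset
    return [c[2], c[4], c[5], c[6]], position


if __name__ == "__main__":
    word = [1, 0, 1, 1]
    stored = hamming74_encode(word)
    stored[4] ^= 1  # simulate a radiation-induced flip at position 5
    recovered, position = hamming74_decode(stored)
    print("recovered:", recovered, "corrected position:", position)
```

The trade-off is overhead: three check bits per four data bits here, against eight per sixty-four in the wider codes, and neither can correct two upsets in the same word.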

Part XVI: The Vision

By 2030, the phrase "cloud computing" will have a new meaning.

The hyperscalers will not be fighting for grid connections in Virginia or water rights in the desert. They will be buying Starship launches by the dozen, deploying compute ribbons into the permanent noon of dawn-dusk orbit.

"Think in terms of Kardashev II and the path becomes obvious." - Elon Musk

A Kardashev Type II civilization captures the majority of its star's energy output. We are nowhere close. But every gigawatt of orbital compute is a step toward that asymptote.

The Sun outputs 3.86 x 10^26 watts. Humanity uses 18 x 10^12 watts. That is a ratio of about 2 x 10^13: we have roughly thirteen orders of magnitude of headroom.

"It is a hard path to 100GW/year of AI in space, but we know what to do." - Elon Musk

The only question is how fast we climb.

References

- Google DeepMind - "Towards a future space-based, highly scalable AI infrastructure system design" (2025)

- Tsinghua University - "Towards Space-Based Computing Infrastructure Network" (2025)

- Starcloud - First LLM training in space (2025)

- SpaceX - Starship development and Starlink constellation data

Published December 2025