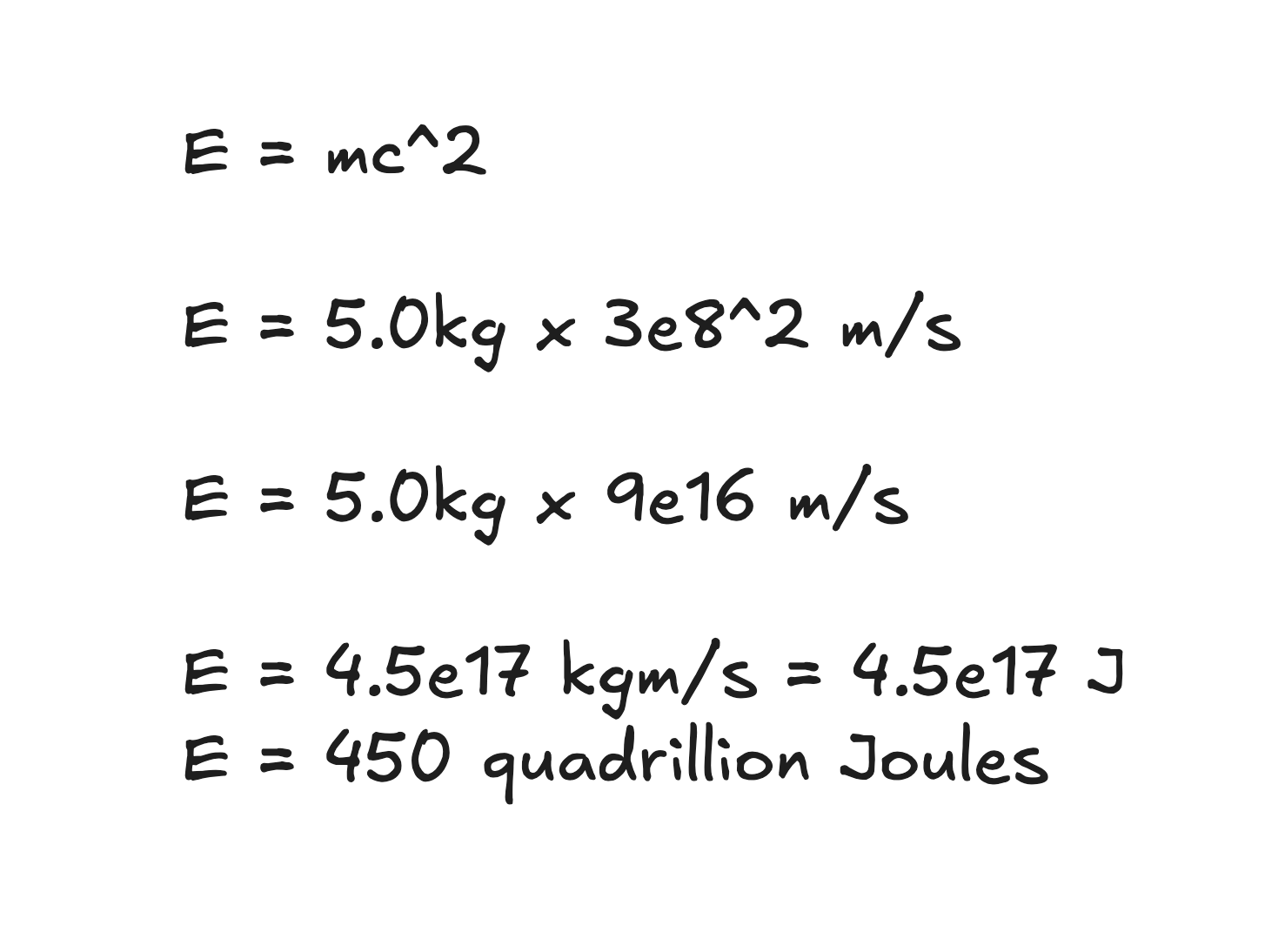

3e8 m/s (speed of light)

- assemble a wormhole network

- wormhole

- speculative

- requires a lot of transport overhead to set up?

- requires a complete understanding of wormholes, if they even exist

- use ASI to automate

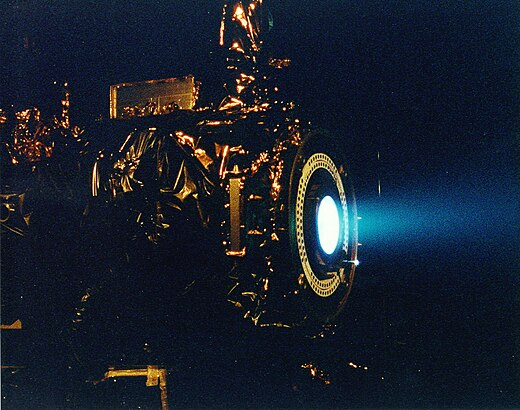

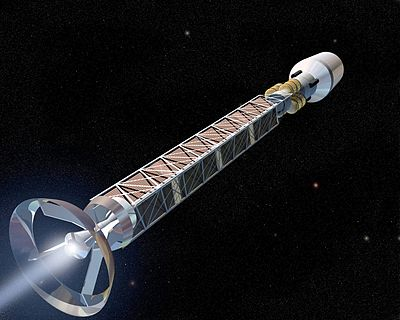

- ion thrusters

- ion thruster

- need more energy on board to ionize the propellant and accelerate the ions
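A back-of-the-envelope way to see why on-board energy is the bottleneck, using the standard rocket relations: thrust F is the propellant mass flow ṁ times the exhaust velocity v_e, and the kinetic power in the exhaust follows from that. The overall efficiency η (electrical power in, beam power out) is an assumed symbol, not a figure from these notes.

```latex
F = \dot{m}\, v_e
\qquad
P_{\text{beam}} = \tfrac{1}{2}\dot{m}\, v_e^{2} = \tfrac{1}{2} F\, v_e
\qquad
P_{\text{electric}} = \frac{P_{\text{beam}}}{\eta} = \frac{F\, v_e}{2\eta}
```

At fixed thrust, the required electrical power grows linearly with exhaust velocity, so the high v_e that makes ion thrusters propellant-efficient is exactly what makes them power-hungry.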

- antimatter propulsion

Energy


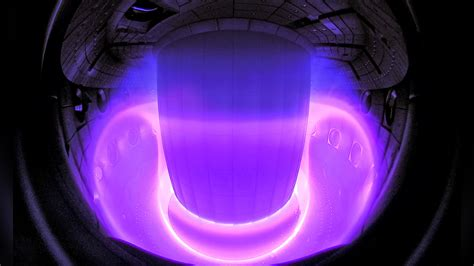

- tokamak

- railgun

black hole harvesting

solar (during travel)

energy transfer

- electricity

- superconductors


energy storage

- batteries

- stars

- black holes (to harvest later)

Solving Intelligence

understanding consciousness

- understand the brain

- neuroscience + electrochemistry

- find aliens

- long-distance lenses / communication

- space travel and exploration near star systems that might host alien life

- cryogenic sleep

- AI autopilot

- sustainable energy for life support & propulsion (nuclear fusion)


ASI

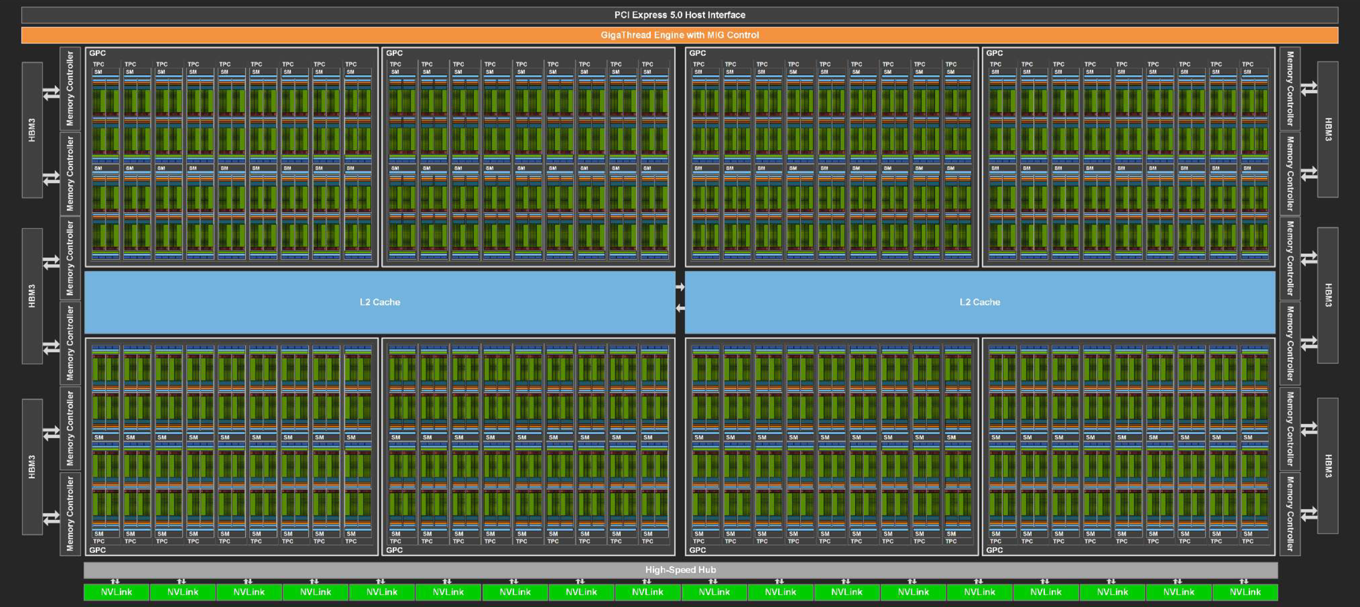

compute infrastructure

- robot construction

- automated chip design

- sustainable energy for compute

- materials required for large clusters

- asteroid mining

- assembly theory level autonomy in the limit

- on board energy source for propulsion

- powerful AGI should be able to automate all of the non-physical aspects of this

- simulations


self-improving AI

- alignment / safety

- What 2026 Looks Like (LessWrong): https://www.lesswrong.com/posts/6Xgy6CAf2jqHhynHL/what-2026-looks-like

- Situational Awareness: https://situational-awareness.ai/

- LessWrong

- https://www.alignmentforum.org/s/mzgtmmTKKn5MuCzFJ

- Rich Sutton's views on alignment/safety

- https://darioamodei.com/machines-of-loving-grace

- mech interp

- Alignmentforum.org

- Tools

- TransformerLens

- Neuroscope

- 200 different problems in mech interp, as seen in the post

- Superposition and Polysemanticity

- Neuron polysemanticity is the observed phenomenon that many neurons seem to fire (have large, positive activations) on multiple unrelated concepts. Superposition is a specific explanation for neuron (or attention head) polysemanticity: the network represents more sparse features than it has neurons (or attention-head dimensions) by storing them in near-orthogonal directions.
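A toy numerical sketch of the superposition idea, under simple assumptions and not a model of how a trained network learns its features: 1024 "features" get random unit directions in a 256-dimensional space, so there are 4x more features than dimensions. Random high-dimensional directions are nearly orthogonal, so when only a few features are active at once (sparsity), reading each feature out with a dot product still recovers which ones are on. The sizes, seed, and helper names are arbitrary choices for illustration.

```python
import numpy as np


def random_feature_directions(n_features: int, dim: int, seed: int = 0) -> np.ndarray:
    """Return n_features random unit vectors in R^dim, one per row."""
    rng = np.random.default_rng(seed)
    w = rng.standard_normal((n_features, dim))
    return w / np.linalg.norm(w, axis=1, keepdims=True)


def max_interference(w: np.ndarray) -> float:
    """Largest |cosine similarity| between two distinct feature directions."""
    cos = w @ w.T
    np.fill_diagonal(cos, 0.0)  # ignore each feature's similarity with itself
    return float(np.abs(cos).max())


def encode(active: list, w: np.ndarray) -> np.ndarray:
    """Superpose the active features (each with value 1) into one dim-sized vector."""
    return w[active].sum(axis=0)


def decode_top_k(x: np.ndarray, w: np.ndarray, k: int) -> set:
    """Read every feature out with a dot product and keep the k strongest."""
    scores = w @ x
    return set(np.argsort(scores)[-k:].tolist())


W = random_feature_directions(n_features=1024, dim=256)  # 4x more features than dimensions
print("max interference:", max_interference(W))
active = [3, 500, 901]
print("recovered:", sorted(decode_top_k(encode(active, W), W, k=3)))
```

Activating more features at once adds more cross-terms to each readout, so the interference noise grows; that is why the sparsity assumption matters for superposition to work.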

- Neel Nanda pioneered a lot of this stuff

- use einsum and einops to avoid shape bugs
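A small NumPy illustration of why einsum helps: the subscript string names every axis (b = batch, h = head, q = query position, k = key position, d = head dimension), so the attention-score contraction is spelled out instead of hidden behind transposes. The shapes are arbitrary. einops offers the same named-axis style for reshapes (e.g. `einops.rearrange(x, "b s (h d) -> b h s d", h=heads)`); it is left out here to keep the sketch NumPy-only.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, heads, seq, d_head = 2, 4, 5, 8
q = rng.standard_normal((batch, heads, seq, d_head))
k = rng.standard_normal((batch, heads, seq, d_head))
v = rng.standard_normal((batch, heads, seq, d_head))

# Every axis is named, so contracting over the wrong axis shows up in the
# subscript string instead of as a silent broadcasting/transpose bug.
scores = np.einsum("bhqd,bhkd->bhqk", q, k) / np.sqrt(d_head)

# Softmax over the key axis (subtract the max for numerical stability).
weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
weights /= weights.sum(axis=-1, keepdims=True)

out = np.einsum("bhqk,bhkd->bhqd", weights, v)

# Same scores with explicit transposes, for comparison.
scores_matmul = q @ k.transpose(0, 1, 3, 2) / np.sqrt(d_head)
print(np.allclose(scores, scores_matmul), out.shape)
```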

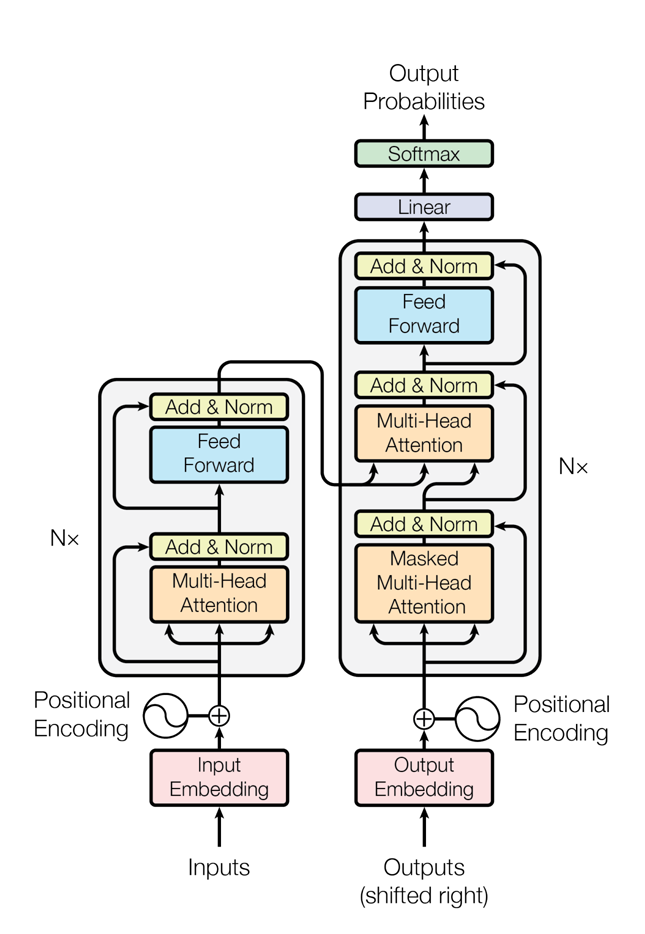

- Induction heads

- When the current token is A, the induction head looks back for an earlier occurrence of A in the sequence.

- It then attends to the token that followed that earlier A (call it B).

- It predicts that B will follow A again: [A][B] ... [A] → [B].

- So it's sort of like how we "induce" something or do an induction proof: it's not direct, but we use our axioms to conclude that since something happened "over there", something similar also happens "here".

- example: "Also did you know..." - "Also let's jump back to..."
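A plain-Python sketch of the idealized induction-head rule, [A][B] ... [A] → [B]. It models only the input/output behavior; in a real transformer the rule is implemented by attention (an induction head composing with an earlier previous-token head), not by an explicit loop. The function name is hypothetical.

```python
from typing import List, Optional


def induction_prediction(tokens: List[str]) -> Optional[str]:
    """Idealized induction-head rule: [A][B] ... [A] -> predict [B].

    Looks back for the most recent earlier occurrence of the current token A
    and returns the token that followed it, or None if A has not appeared before.
    """
    current = tokens[-1]
    # Scan earlier positions right to left, skipping the current position itself.
    for i in range(len(tokens) - 2, -1, -1):
        if tokens[i] == current:
            return tokens[i + 1]
    return None


print(induction_prediction(["Also", "did", "you", "know", "...", "Also"]))  # did
print(induction_prediction(["x7", "q2", "m9", "x7", "q2"]))  # m9
```

The second example uses arbitrary tokens on purpose: the rule copies whatever followed last time, regardless of meaning.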

- sparse autoencoders

- AGI

define AGI

- books

- Superintelligence - Nick Bostrom


- Life 3.0 - Max Tegmark

- Be sure to read "The Tale of the Omega Team"


- papers / articles

- modalities

- text

- image

- audio

- video

- touch

- creativity

- embodiment (requiring a human by its side to operate)

Minecraft AIs - Reddit


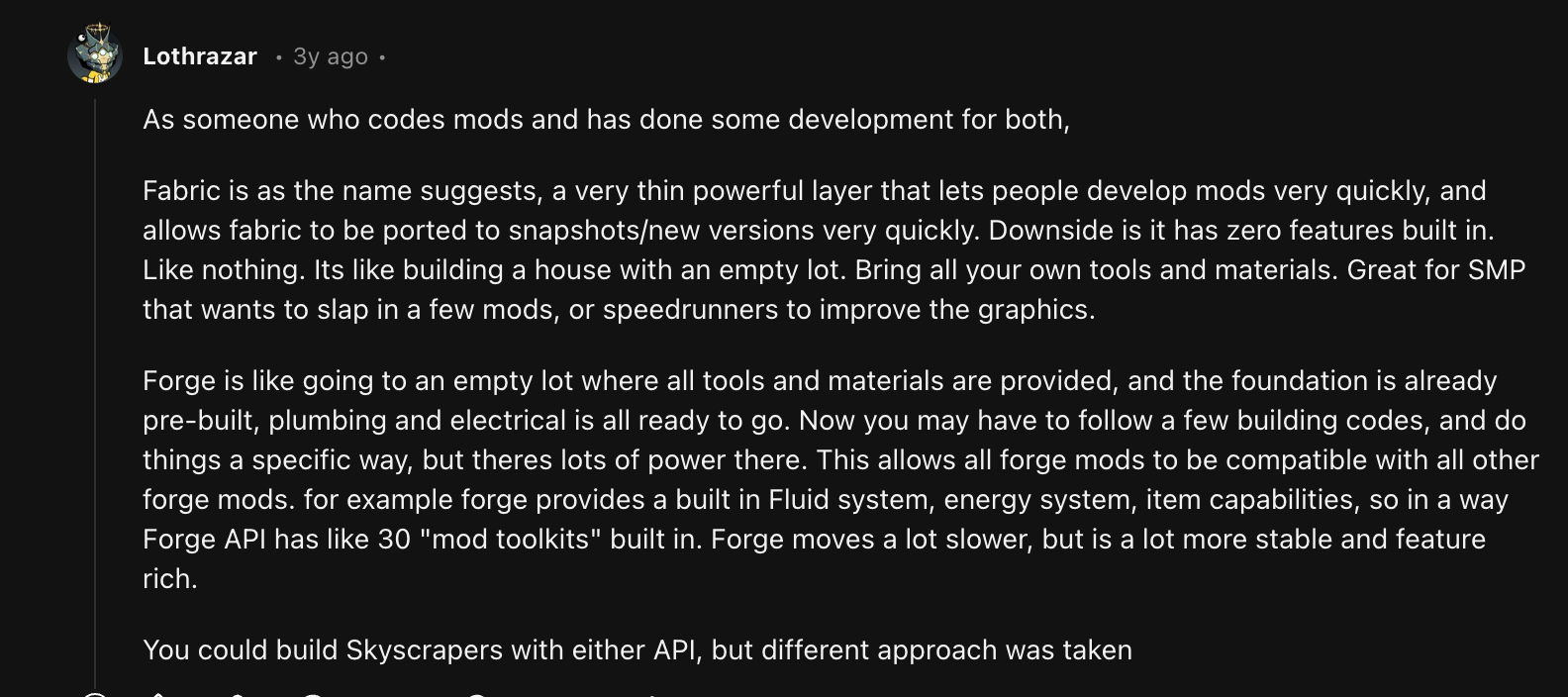

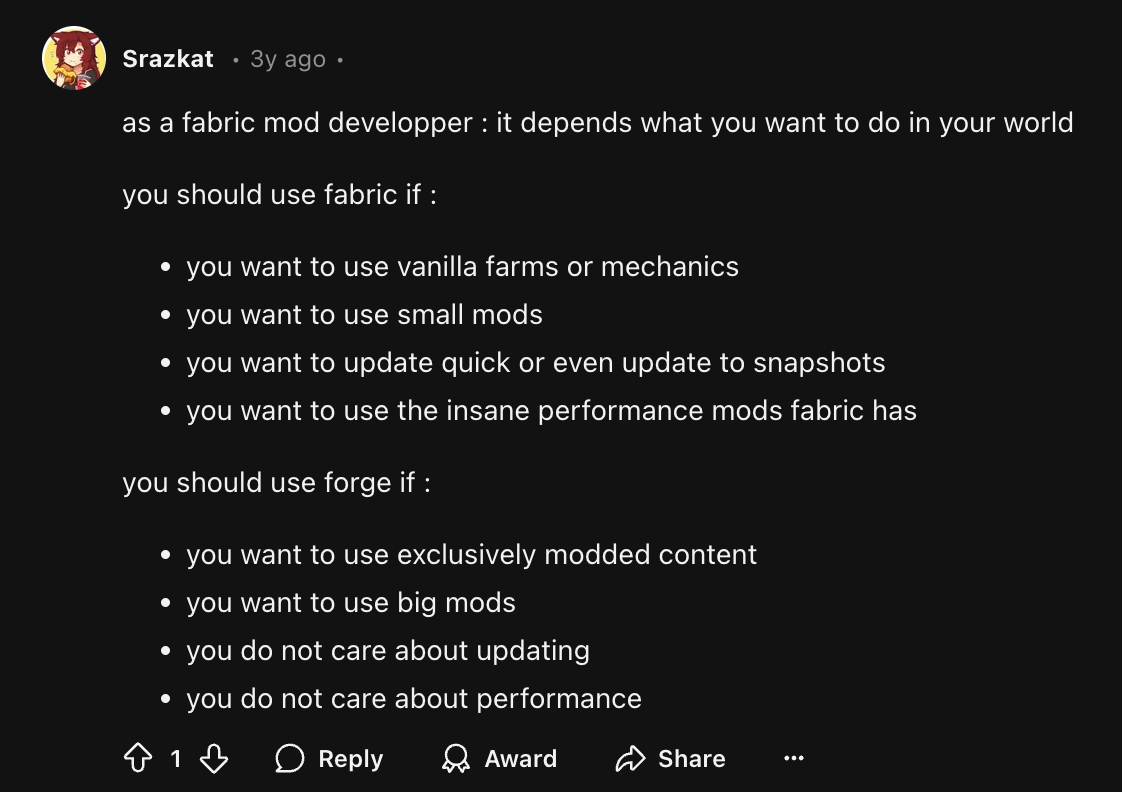

- ok, so I should probably go with Fabric if I'm not reverse-engineering MineRL or Malmo (latest versions, top performance, modularity, works across all OSes)

- the idea is to replicate MineRL down to the game tick (I forgot to mention that I was aiming for this from the start). Options that aren't perfectly synced would work, but they won't feel complete to me.

- I'm starting with GitHub - FabricMC/fabric-example-mod (the example Fabric mod)

- need both the Fabric installer (run with java -jar filename...) and the Fabric API: www.curseforge.com/minecraft/mc-mods/fabric-api/download/5897810

- pasted from Google Keep: for a local Minecraft NN, consider implementing implicit learning so I can simply press a certain key when I come across a type of mob; the neural net can then learn what I'm looking at. The real problem comes in when we need to draw a bounding box: if we only press the key while looking at the mob, the net may not learn the explicit features that define it (e.g., the green color that identifies a creeper). Still useful though.
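A minimal sketch of the keypress-labeling idea, assuming the game loop hands us the current frame whenever a key is pressed. The key bindings, the `KeypressLabeler` class, and its `on_key` method are hypothetical. Each sample gets only an image-level label with no box saying where the mob is, so pairs collected this way suit an image classifier; a detector would still need bounding boxes.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple

# Hypothetical key bindings: one key per mob type.
KEY_TO_MOB = {"1": "creeper", "2": "zombie", "3": "skeleton"}


@dataclass
class KeypressLabeler:
    """Collects (frame, label) pairs whenever a bound key is pressed (hypothetical helper)."""

    key_to_label: Dict[str, str]
    samples: List[Tuple[Any, str]] = field(default_factory=list)

    def on_key(self, key: str, frame: Any) -> bool:
        """Store the current frame with the key's mob label; ignore unbound keys."""
        label = self.key_to_label.get(key)
        if label is None:
            return False
        # Image-level label only: nothing records WHERE in the frame the mob is.
        self.samples.append((frame, label))
        return True


labeler = KeypressLabeler(KEY_TO_MOB)
labeler.on_key("1", frame="frame_0001")  # looking at a creeper
labeler.on_key("x", frame="frame_0002")  # unbound key, ignored
print(labeler.samples)  # [('frame_0001', 'creeper')]
```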

- humanoid


- dog


- car


- aircraft


non-performance (reasoning) breakthroughs at least as big as transformers

other paradigms to make machines intelligent/learn

- no backpropagation

- no gradient descent

- no loss functions

- evolutionary algorithms
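A minimal (1+λ) evolution strategy in plain Python, as one concrete example of learning without backpropagation or gradient descent: each generation mutates the current best solution with Gaussian noise, evaluates the children, and keeps the best child only if it beats the parent. It still needs a fitness score to compare candidates, so it drops gradients but not the idea of an objective. The toy objective (distance to a vector of 3s), step size, and population size are arbitrary assumptions.

```python
import random
from typing import List


def fitness(x: List[float]) -> float:
    """Toy objective (higher is better): negative squared distance to the all-3s vector."""
    return -sum((xi - 3.0) ** 2 for xi in x)


def evolve(dim: int = 5, offspring: int = 20, sigma: float = 0.3,
           generations: int = 300, seed: int = 0) -> List[float]:
    """(1+lambda) evolution strategy: mutate the parent, keep the best child if it wins.

    No gradients and no backprop: selection only compares fitness values.
    """
    rng = random.Random(seed)
    parent = [0.0] * dim
    parent_fit = fitness(parent)
    for _ in range(generations):
        # Gaussian mutations of the current parent.
        children = [[xi + rng.gauss(0.0, sigma) for xi in parent] for _ in range(offspring)]
        best = max(children, key=fitness)
        best_fit = fitness(best)
        if best_fit > parent_fit:  # elitist: the parent survives unless beaten
            parent, parent_fit = best, best_fit
    return parent


solution = evolve()
print([round(xi, 2) for xi in solution], fitness(solution))
```

With a fixed step size the search stalls once it is within roughly one mutation length of the optimum; practical ES variants adapt sigma over time.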

compute effect of using more powerful AIs to assist with AGI research

audio models

video models

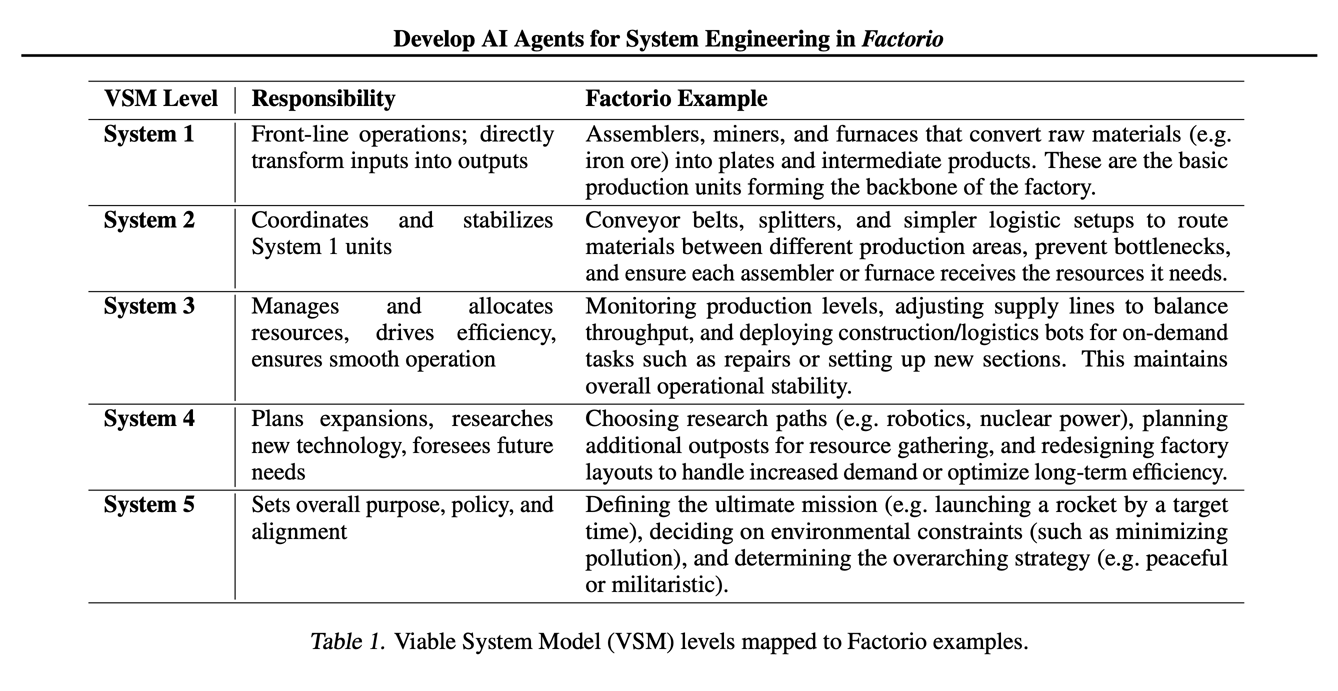

AI agents

- environments

- MineRL & MineRL GitHub

- OpenAI Gym (GitHub, paper)

- agents performance

- environments

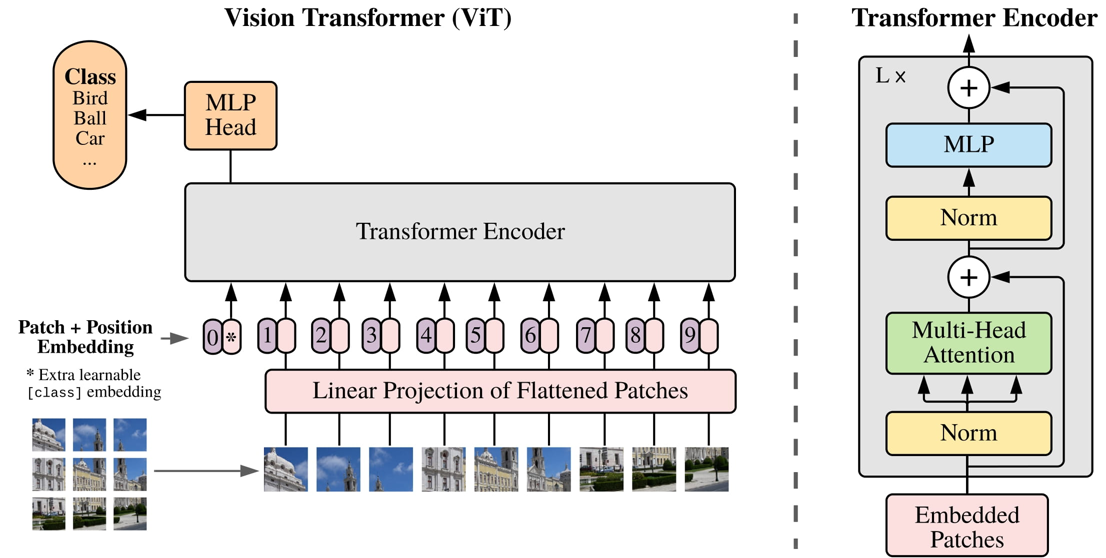

vision models

- yolo (you only look once)

- vision transformers (ViTs)

training / inference / CUDA

- kernel generation:

- kernel gen

- x.com/i/grok/share/dT7QTvzfbE2FW8l9vnXNJ8VHT

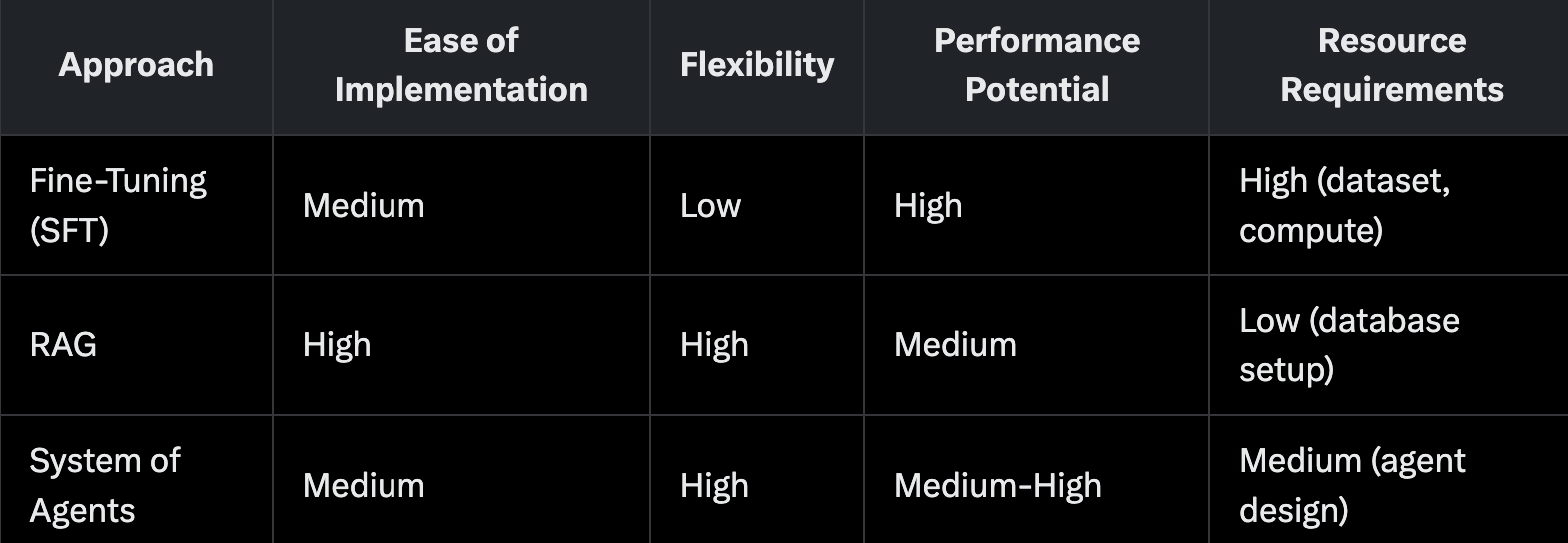

- MY QUESTIONS TO GROK:

- if I wanted to completely automate CUDA kernel generation with reasoning LLMs... My thought process is a bit scrambled, but it looks like this: you ask the LLM to generate a faster kernel, giving it an (optional) text prompt along with some PyTorch code. The PyTorch code takes some input shape, does a bunch of operations, and produces an output shape for the final result. The idea is that instead of launching fast kernels separately, we fuse them into a SINGLE fast kernel. Going lower level, a model like DeepSeek-R1 would output some GPU code, and a verifier would ensure the GPU results match the PyTorch results. We would also have a speedup counter to see how much the current optimization improved on the previous state. If the results don't match, we feed them back through a loop where the prompt is customized to fix the script and to encourage different approaches to figuring out why they don't match (error-checking macros, stride/indexing errors, incorrect reductions, numerical instability, etc.). Once the results do match, we compare performance. Performance is the other feedback loop, which uses a different set of prompts to optimize further (I'll design this later). We could reformat the prompt each time, both to ensure correctness and to make sure any performance gain in the optimization phase is achieved properly. Ideally, we would save each WORKING optimization to its own script with a randomly generated name (adjective_noun). ANYWAYS... curious to hear your feedback on this.

- I suppose this would be easier if we put more effort into fine-tuning (SFT) a reasoning model on the CUDA compatibility matrix, device stats like ./deviceQuery from cuda-samples, etc. Or use RAG, or just have a system of agents that places this info directly into the context window and works through it step by step (different agents have different system prompts for how they take in data and come up with good optimizations). We would actually only need maybe FlashAttention-2 and 3, ThunderKittens, CUTLASS, and a couple (2) of other projects. The rest could be RL, because output verification and performance optimization make crafting reward functions VERY EASY, like KernelBench from Stanford, for example: https://arxiv.org/pdf/2502.10517v1
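A runnable skeleton of the two feedback loops in the question (correctness first, then speedup), with plain Python functions standing in for compiled CUDA kernels and a fixed list standing in for the reasoning model's proposals. Everything here is a hypothetical stand-in: `reference` plays the multi-launch PyTorch code, `fused_candidate` a correct fused kernel, `buggy_candidate` a wrong one, and the feedback strings are what would be fed into the next prompt. A real version would compile and launch GPU code and compare tensors with a tolerance (e.g. `torch.allclose`).

```python
import random
import time
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Kernel = Callable[[Sequence[float]], float]
ADJECTIVES = ["brisk", "quiet", "amber", "nimble", "lucid"]
NOUNS = ["falcon", "lattice", "comet", "otter", "prism"]


def reference(x: Sequence[float]) -> float:
    """Stand-in for the PyTorch baseline: three separate passes, like three kernel launches."""
    relu = [max(v, 0.0) for v in x]
    scaled = [2.0 * v for v in relu]
    return sum(scaled)


def fused_candidate(x: Sequence[float]) -> float:
    """Stand-in for a correct fused kernel proposed by the model: one pass over the data."""
    return sum(2.0 * v for v in x if v > 0.0)


def buggy_candidate(x: Sequence[float]) -> float:
    """Stand-in for an incorrect proposal (it forgets the ReLU)."""
    return sum(2.0 * v for v in x)


def verify(kernel: Kernel, inputs: List[List[float]], rtol: float = 1e-6) -> Optional[str]:
    """Return None if the kernel matches the reference on every input, else an error message."""
    for i, x in enumerate(inputs):
        want, got = reference(x), kernel(x)
        if abs(want - got) > rtol * max(1.0, abs(want)):
            return f"mismatch on input {i}: expected {want:.6g}, got {got:.6g}"
    return None


def bench(kernel: Kernel, inputs: List[List[float]], repeats: int = 5) -> float:
    """Best-of-N wall-clock time in seconds for running the kernel over all inputs."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        for x in inputs:
            kernel(x)
        best = min(best, time.perf_counter() - start)
    return best


def optimize(candidates: List[Kernel], inputs: List[List[float]],
             seed: int = 0) -> Tuple[Dict[str, Kernel], List[str]]:
    """Correctness loop, then performance loop; returns saved kernels and feedback messages."""
    rng = random.Random(seed)
    saved: Dict[str, Kernel] = {}
    feedback: List[str] = []
    best_time = bench(reference, inputs)
    for kernel in candidates:  # in the real system: one LLM proposal per iteration
        error = verify(kernel, inputs)
        if error is not None:
            # Would go into the next "find and fix the bug" prompt.
            feedback.append(f"{kernel.__name__}: {error}")
            continue
        elapsed = bench(kernel, inputs)
        speedup = best_time / elapsed
        feedback.append(f"{kernel.__name__}: correct, speedup x{speedup:.2f}")
        if speedup > 1.0:
            name = f"{rng.choice(ADJECTIVES)}_{rng.choice(NOUNS)}"
            while name in saved:  # keep adjective_noun names unique
                name = f"{rng.choice(ADJECTIVES)}_{rng.choice(NOUNS)}"
            saved[name] = kernel
            best_time = elapsed
    return saved, feedback


data_rng = random.Random(42)
inputs = [[data_rng.gauss(0.0, 1.0) for _ in range(10_000)] for _ in range(3)]
saved, feedback = optimize([buggy_candidate, fused_candidate], inputs)
print(feedback)
print(list(saved))
```

Because `verify` runs before `bench`, a fast but wrong kernel can never be saved. The same ordering gives a simple RL reward shape: zero if incorrect, otherwise something increasing in speedup.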

- kernelbench

- github

- arxiv

- guide for distributed computing (Hugging Face)

- attention

- general

- inference only

- optimizers

existing techniques

- distillation

- token-level distillation

- online logit distillation

- Mixture-of-Experts

- learning hacks to shorten training time

- quantization (is int4/fp4 optimal?)
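A minimal NumPy sketch of symmetric per-group int4 weight quantization, useful for measuring the error side of the int4 question on your own weights. The group size of 32 and the symmetric scheme (largest |w| per group maps to code 7) are assumptions. It says nothing about fp4 formats, which space their 16 levels non-uniformly.

```python
import numpy as np


def quantize_int4(w: np.ndarray, group_size: int = 32):
    """Symmetric per-group int4: integer codes in [-8, 7] plus one float scale per group.

    Assumes w.size is divisible by group_size.
    """
    groups = w.reshape(-1, group_size)
    scale = np.abs(groups).max(axis=1, keepdims=True) / 7.0  # largest |w| maps to code 7
    scale[scale == 0] = 1.0  # all-zero group: any scale works, avoid dividing by zero
    codes = np.clip(np.round(groups / scale), -8, 7).astype(np.int8)  # int8 holds the 4-bit values
    return codes, scale


def dequantize_int4(codes: np.ndarray, scale: np.ndarray, shape) -> np.ndarray:
    """Map integer codes back to floats and restore the original shape."""
    return (codes.astype(np.float32) * scale).reshape(shape)


rng = np.random.default_rng(0)
w = rng.standard_normal((64, 128)).astype(np.float32)
codes, scale = quantize_int4(w)
w_hat = dequantize_int4(codes, scale, w.shape)
rel_err = np.linalg.norm(w - w_hat) / np.linalg.norm(w)
print(f"relative reconstruction error: {rel_err:.3f}")
```

Smaller groups lower the error at the cost of storing more scales; comparing that trade-off against an fp4 variant on real weights is one way to approach the question.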

- tokenization hacks

- test-time compute (reasoning & think tokens)

- reasoning in latent space

- embeddings / pos enc

- RLHF (PPO/DPO)

- Learning to Summarize from Human Feedback

- Deep Reinforcement Learning from Human Preferences

- Fine-Tuning Language Models from Human Preferences

- Training Language Models to Follow Instructions with Human Feedback

- Scaling Laws for Reward Model Overoptimization

- Direct Preference Optimization: Your Language Model is Secretly a Reward Model

- RL (in general)

- diffusion models

- synthetic data

- base transformer additions

- differential attention (for attention noise reduction)
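A single-head NumPy sketch of the core differential-attention formula from the Differential Transformer paper, (softmax(Q1K1ᵀ/√d) − λ·softmax(Q2K2ᵀ/√d))·V: two attention maps are computed and one is subtracted from the other, so attention mass that both maps share (the "noise") cancels. This is a simplification: λ is a fixed scalar here (the paper learns it), and the per-head normalization and multi-head wiring are omitted.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def diff_attention(q1, k1, q2, k2, v, lam: float = 0.5):
    """Single-head differential attention with a fixed lambda (the paper learns it).

    Returns the output and the differential attention map (rows sum to 1 - lam).
    """
    d = q1.shape[-1]
    a1 = softmax(q1 @ k1.T / np.sqrt(d))
    a2 = softmax(q2 @ k2.T / np.sqrt(d))
    diff = a1 - lam * a2  # attention mass shared by both maps cancels out
    return diff @ v, diff


rng = np.random.default_rng(0)
seq, d = 6, 8
q1, k1, q2, k2, v = (rng.standard_normal((seq, d)) for _ in range(5))
out, diff = diff_attention(q1, k1, q2, k2, v)
print(out.shape, diff.sum(axis=-1))
```

In the extreme case where both maps are identical and λ = 1, everything cancels and the output is zero, which is the common-mode cancellation the method relies on.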

- Kolmogorov-Arnold Networks (KANs): KANs, KAN 2.0, FastKANs (paper, code), FasterKANs (code)

- normalizations: layernorm, rmsnorm, post-norm vs pre-norm
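A NumPy sketch of the two normalizations and the two residual placements: LayerNorm centers and rescales each vector, RMSNorm only rescales by the root-mean-square (no mean subtraction, no bias), post-norm normalizes after the residual add, and pre-norm normalizes only the sublayer's input so the residual path stays an identity. `sublayer` stands in for attention or an MLP; the function names are illustrative.

```python
import numpy as np


def layer_norm(x, gamma, beta, eps=1e-5):
    """Center each vector, scale it to unit variance, then apply learned gain and bias."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta


def rms_norm(x, gamma, eps=1e-5):
    """Only rescale by the root-mean-square: no mean subtraction and no bias."""
    rms = np.sqrt(np.mean(x ** 2, axis=-1, keepdims=True) + eps)
    return gamma * x / rms


def post_norm_block(x, sublayer, norm):
    """Original Transformer placement: normalize after the residual add."""
    return norm(x + sublayer(x))


def pre_norm_block(x, sublayer, norm):
    """Pre-norm placement: normalize only the sublayer input; the residual path is untouched."""
    return x + sublayer(norm(x))


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 16)) * 3.0 + 1.0
gamma, beta = np.ones(16), np.zeros(16)
ln = lambda h: layer_norm(h, gamma, beta)
zero_sublayer = lambda h: np.zeros_like(h)  # stand-in for attention/MLP
print(np.array_equal(pre_norm_block(x, zero_sublayer, ln), x))
```

With a sublayer that outputs zeros, the pre-norm block passes x through unchanged while the post-norm block still normalizes it; that untouched residual path is the usual argument for why pre-norm trains more stably in deep stacks.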

- distillation

- alignment / safety
